Today marks the official release of a new digital preservation tool developed by The National Archives, CSV Validator version 1.0. This follows on from well known tools such as DROID and PRONOM database used in file identification (discussed in several previous blog posts). The release comprises the validator itself, but perhaps more importantly, it also includes the formal specification of a CSV schema language for describing the allowable content of fields within CSV (Comma Separated Value) files, which gives something to validate against.

The primary purpose of the validator is to check that the metadata we receive with files for preservation meets our requirements. CSV can very easily be produced from standard text editors and spreadsheet programmes (the technical knowledge required for XML has proved a stumbling block). These tools are familiar to most computer users already. On the other hand, this ease of editing means that errors can easily creep in. Historically there hasn’t been much standardisation of CSV files, though the name suggests that a comma is used as the separator between fields, TSV (tab separated values) is also quite common, and other separators are far from rare. The closest thing to a true standard is RFC 4180, so we have made this our default position for files. Even then, there was no agreed way to describe the content of fields, for example, to specify that one field should contain a date (in a particular range), another an address, another a catalogue reference and so on.

For born digital material received from other government departments the CSV metadata will often take the form of a report from DROID which lists the files and their file format, with some system information, perhaps enhanced with some additional description of the records which will ultimately be passed through to Discovery, our catalogue, to enable searching for the files. DROID also produces a checksum for each file which enables us to verify that there have been no errors in transferring the files to The National Archives (or if we do find errors, we know we need to request replacement files). However, for digitisation projects we require a wide range of metadata, sufficient that we can directly relate an image file back to the original document from which it was created, and demonstrate the various actions taken during the digitisation process from the original image capture via cropping, deskewing and conversion of the image to our master preservation format. This is particularly important when we will only accession the image files, so we need to be able to demonstrate the integrity of the digitisation project by capturing a full provenance trail. We will generally also want to link the images up to information transcribed from them.

This provided us with the starting point for development. We first made use of CSV validation in the project to put the County Durham Home Guard records online (WO 409). However, the scripts used were specific to that project, so we needed to generalise the approach to handle a wider range of data and validation tests. At that time we were very lucky to have a very skilled contractor working with us, Adam Retter, and he created the formal specification of the schema language. This was created in Extended Backus–Naur Form, setting out the grammar of the formal language to be used for the schemas. With this in place, we could then develop an application to validate CSV files according to these schemas.

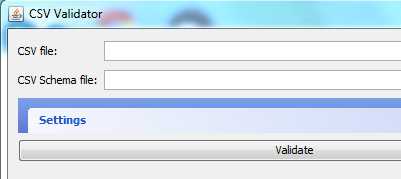

Development was in Scala. Scala programs run in the Java Virtual Machine, which means our validator can run on any platform which has Java support. However, the code is typically more compact than Java, and Scala has a mechanism called Parser Combinators, which allow quick construction of complex parsers (such as that needed to parse CSV Schema). A simple Swing GUI (Graphical User Interface) was created, or the program can be run from the command line. There is also an API to allow calling direct from Java applications or other Scala programs.

So, what does a schema look like, and what sort of validation can actually be performed? A schema is divided into two major parts, the prolog and the body. In the prolog, we state the version of the schema language to be used with the statement version 1.0; then we can set some options for the overall processing of a file: is there a header row in the file before the first data row; is the separator actually a comma, or some other character; are all columns quoted; and how many columns are expected. If the options are not specified, default behaviour will be assumed (based on RFC 4180), or in the case of specifying the number of columns, this causes the validator to check that there are actually that number of column validation rules in the schema. The body then comprises one column definition per column of your CSV file, each of which may be explained with a comment. In turn, a column definition comprises a column identifier and a column rule. A column rule may be empty if there is no particular validation requirement. So the minimal syntactically valid schema is:

version 1.0

1:

Of course this isn’t actually terribly useful, describing a CSV file (with a header row) of a single column which can contain anything! More helpful as an example is:

version 1.0

@totalColumns 3

name: notEmpty /*a name must be given, but we don't specify anything else about what constitutes a valid name*/

age: range(0, 120) and positiveInteger /*ages must be a number between 0 and 120, and can only be whole numbers, no half years etc*/

gender: is("m") or is("f") /*for this example, only binary male/female gender accepted, expressed as the single characters m or f*/

The example shows a handful of the different validation tests that can be employed, the full range can be found in the CSV Schema Specification. Most will be self explanatory, but it’s probably worth mentioning two that are particularly important in the validation of metadata files, fileExists and checksum. The first, unsurprisingly, takes a filepath from the metadata file and ensures that there is actually a file at the location described by the filepath. The second takes a filepath and a checksum value from the metadata and verifies that the checksum for the file does actually correspond to the value recorded in the metadata (this gives us confidence that the file has not been corrupted in transit). The checksum test also points to another aspect of the validator, normally a test examines the value of the current column, but there are cases where we wish to crosscheck with another column, we refer to another column by preceding its column_identifier with the $ sign. So if we were to add a fourth column to the example schema above:

version 1.0

@totalColumns 4

name: notEmpty /*a name must be given, but we don't specify anything else about what constitutes a valid name*/

age: range(0, 120) and positiveInteger /*ages must be a number between 0 and 120, and can only be whole numbers, no half years etc*/

gender: is("m") or is("f") /*for this example, only binary male/female gender accepted, expressed as the single characters m or f*/

title: if($gender/is("m"),is("Mr"),is("Mrs") or is("Ms") or is("Miss")) /*for this example, if the gender column has the value "m" then the title must be "Mr", else the title may be either "Mrs", "Ms" or "Miss*/

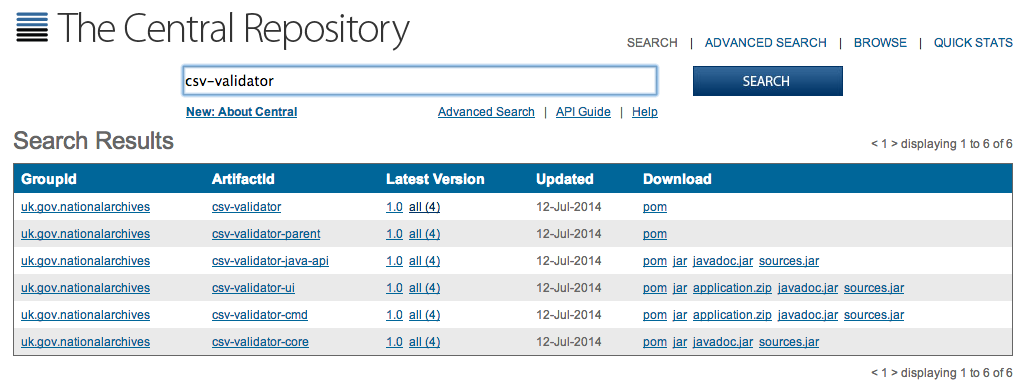

The CSV Validator is open source software under the Mozilla Public Licence version 2.0 (MPL2), the source code can be found in the CSV-Validator project on GitHub, and the executables on Maven Central. Full installation and usage instructions are provided, along with the full CSV Schema specification. The CSV Schema also has its own Github project (again under MPL2). Example schema and CSV files can be found in the CSV Schema project. Contributions from other developers are encouraged.

Today, in addition to this blog post, Adam Retter is talking about the project at csv,conf in Berlin, in a session called CSV Validation at the UK National Archives (his complete set of slides can be found on slides.com). This is a fringe event of the Open Knowledge Festival. We are not the only group to have been looking at the issue of CSV specification and validation, it’s an idea that seems to have reached a critical mass. A key group is the CSV on the Web Working Group. This is looking at a variety of use cases for the use of CSV data, and is looking at schemas expressed in JSON, and converting CSV data directly to linked open data, which is beyond our requirements at The National Archives.

[…] CSV validator – a new digital preservation tool […]

We’ve released version 1.1 of both the schema language and validator today (25 January 2016). The link given for the validator above will now take you to the new version, the updated schema language specifcation is to be found at http://digital-preservation.github.io/csv-schema/csv-schema-1.1.html

This is an awesome development. Is there a news group / chat group where we can collaborate? I have downloaded and compiled and started working with the kit. Found issues with Windows vs Unix files (CRLF) vs (LF) and would like to understand how one can make the tool faster (we have massive 12-50GB files to validate)

Hardy, glad you like it. The GitHub project https://github.com/digital-preservation/csv-validator is probably the easiest place currently to raise any issues, or though strictly for one of our other tools you’ll find an email address that will reach the team on http://www.nationalarchives.gov.uk/information-management/manage-information/preserving-digital-records/droid/.

We’ve not run it on anything quite so big, 300-400MB is probably the biggest. You may find increasing Java heap size helps, particularly if you’re using the unique test at all, I had to increase max heap to 6 or 8 GB to get the 400MB files through, but those didn’t have multiple unique constraints within the schema.