‘Baffled by archives’ is a three-part blog series addressing the issues and challenges that we encounter as we progress with Project Alpha, introduced in November in ‘Building an archive for everyone’. Part one considered why visitors to The National Archives on the web are confused. In part two we began to look at ways of helping them out. In this final part we look at possible solutions.

Intelligent systems

One solution to the bafflement problem is to sit the user next to a kind and infinitely patient archivist while they go about their exploration. The archivist can watch what the user is doing and see where they are getting stuck. They can suggest different finding strategies, run a few queries in the background related to the user’s current activity to see if those queries fare better, and show the user any that look promising. They can explain what they are looking at as it comes up and how to delve deeper. They can give an indication of the nature and extent of the content the user is brushing up against, suggest other locations that might yield relevant information, and show the user where they are in the structure and what that structure means.

Photo by Omar Flores on Unsplash

Maybe the patient archivist can handle the complexities of feeding advanced search just what it needs on the user’s behalf, and keep their direct interaction simple. This patient archivist could ask the user a few questions as they explore, reacting to what they think the user is after and using their knowledge of what has worked in the past for others. They could recognise keywords in search terms and suggest additional background reading, and promote particular research help if it doesn’t seem possible to incorporate the knowledge into the discovery interface.

At the moment, the web visitor’s alternative to the infinitely patient archivist is to absorb this expertise by careful reading of research guides, or maybe through live chat or a phone call.

It seems a tall order to create an intelligent system that does all this, is perceived as useful and doesn’t annoy seasoned researchers, Clippy-style: ‘I think you are looking for Prize Papers: here are a few places you could look and queries you can try, and you can read this guide for more detail.’

Experiments towards such an assisted service design could be attempted, learning what best to suggest to users from combinations of search terms, description text and locations in the hierarchy. We could make the server do far more work on the user’s behalf, gently help the user establish the mental model, and gradually step back once their searching appears to be yielding results. We could ask users how relevant their results are, to learn what relevant results feel like.

Can we apply some machine learning to this problem? What information would be used for training assistance, how is that information evaluated, and what does the resulting assistance look like? What pathways through the archives are being reinforced by this learning, how do we collect evidence of success from users in a helpful and non-distracting way, and how do we know that this learning is applicable to the knowledge journeys of other users? The well-trodden pathway reinforced by the feet of many amateur genealogists might be less useful or even counterproductive for an experienced researcher working in a familiar collection.

In a search engine like Google, machine learning is at work in autocomplete, in result rankings, and in targeting adverts. Instead of advertising, we could target more structured help, ‘promo’ explainers and suggestions for further searches.

This is really hard! Designing and testing alternative ways of conveying hierarchy, designing and testing multiple generous interface approaches that sit alongside the hierarchy, combining digitised content where available with archive description as material that generates more navigation up, down and across hierarchies – these seem like easier prospects than an intelligent exploration service.

But maybe there are some tractable approaches to this that could be tried. And maybe it’s not completely disjoint from live chat – there will be questions that only a human archivist could answer – ‘after all this exploring with intelligent assistance, do you think it’s worth me paying a visit to Kew to have a look at this?’

We could start by designing interfaces that we think would be shown to users if machine learning processes were at work, testing them, and seeing how we could make the successful ones appear with less human contrivance. We will also often be surprised by what works for users and by what contributes to overall satisfaction in journeys through the archive (if satisfaction is the right metric).

The application of more familiar generous interface techniques is not incompatible with intelligent assistance; ideally the two reinforce each other: those elements that might start out as ‘smoke and mirrors’ AI for establishing what works and what doesn’t can evolve into more widespread assistance across a greater extent of the archive.

Another approach that could be tried, either with these techniques or independently, is to assist users by giving them a sense of how much there is to know about a particular topic. The Financial Times has a Knowledge Builder tool (also known as ‘Topic Tracker’). This gives users a sense of how much they have read on a given subject, which in turn gives a sense of that subject’s scale, the depth of material that might be found relating to it.

This could be given an archival twist by helping to get across the size of different collections. This needn’t be intrusive, and could be used to decorate the user’s view of the hierarchy and their place within it through visualisations of the size of the branches they have explored and of those they haven’t seen. How much ground have I covered in this topic? This doesn’t have to be tied to authenticated users, although it may be more effective for them, as it would build their understanding of what they have seen across different devices and over longer spans of time.

A ‘topic’ in this sense could be a level in the hierarchy of one collection, or a topic that cuts across many collections (combining generous interface elements), or a mixture of both. How many branches of this tree have I seen, and how many branches relating to these topics are there in other trees? Users with accounts could get an overview of their exploration from a dashboard, which could help them plan further exploration and see suggestions.
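The ‘how many branches of this tree have I seen?’ question can be illustrated with a small calculation. The following is a minimal Python sketch only, assuming a catalogue hierarchy held in memory as a tree and a set of catalogue references the user has viewed. `CatalogueNode`, `branch_coverage` and the `DEPT` references are hypothetical and do not reflect how the catalogue or the document ordering system actually store data. Each node counts as one description, so ‘size’ here means the number of descriptions in a branch, not shelf space or physical items.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CatalogueNode:
    """Hypothetical node in a catalogue hierarchy (e.g. department, series, piece)."""
    ref: str
    children: list[CatalogueNode] = field(default_factory=list)


def branch_coverage(root: CatalogueNode, viewed: set[str]) -> dict[str, tuple[int, int]]:
    """Map each branch's reference to (descriptions viewed, descriptions in total).

    Each node counts as one description; a branch's totals include its own
    description plus everything beneath it.
    """
    coverage: dict[str, tuple[int, int]] = {}

    def visit(node: CatalogueNode) -> tuple[int, int]:
        seen = 1 if node.ref in viewed else 0
        total = 1
        for child in node.children:
            child_seen, child_total = visit(child)
            seen += child_seen
            total += child_total
        coverage[node.ref] = (seen, total)
        return seen, total

    visit(root)
    return coverage


# A toy hierarchy with hypothetical references.
tree = CatalogueNode("DEPT", [
    CatalogueNode("DEPT 1", [CatalogueNode("DEPT 1/1"), CatalogueNode("DEPT 1/2")]),
    CatalogueNode("DEPT 2", [CatalogueNode("DEPT 2/1")]),
])
coverage = branch_coverage(tree, viewed={"DEPT 1", "DEPT 1/1"})

for ref in ("DEPT", "DEPT 1", "DEPT 2"):
    seen, total = coverage[ref]
    print(f"{ref}: seen {seen} of {total} descriptions")
# DEPT: seen 2 of 6 descriptions
# DEPT 1: seen 2 of 3 descriptions
# DEPT 2: seen 0 of 2 descriptions
```

Pairs like these are the kind of data a hierarchy visualisation could be decorated with, for example by shading each branch according to the fraction already seen.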

This could later grow into more offerings for members: enthusiastic users of the archives sometimes enjoy the feeling of working together, making contributions that aid the understanding of others; this works in person, in a room together, learning about a particular topic. Could we make more of this for members, add a layer of additional content alongside the archival description that builds a sense of community around collections, without losing sight of the importance of the official record?

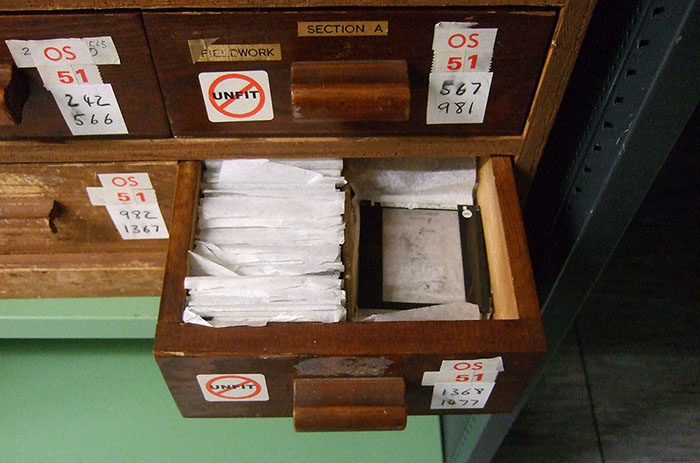

What would this sort of functionality look like in an archive, where it could be combined with visualisations of hierarchy? Data about the size of branches in the hierarchy exist, but not in a direct form. The document ordering system knows where things are, how they are shelved, and generates information about the movement of physical objects. We can start to look at whether this data could be harnessed for visualisation.

This sometimes applies to digitised material, but it is not limited to it: our visualisations can be more abstract. And if we do explore this route, those visualisations must be simple, helpful and accessible.

On the web, the materiality of the archive is projected into millions of description pages; this levels the archive (one web page feels just like another). Combining visualisations of structure with visualisations of how much of that the user has actually visited could be a powerful tool in establishing the mental model and the huge differences in quantities of content in different parts of the archive.

Happy exploring

Users should expect the kind of world-class service and content design that informs public and commercial exemplar service offerings (whether transferring money via online banking, registering to vote, ordering a new bin, buying a book, or whatever). This should encompass all their transactions with The National Archives: for those preparing for a research visit and for those whose interaction will be online only; for those landing on a catalogue record at random from a search engine and for those exploring their way around the site and the archives; for those who might encounter digitised material in their travels, and for those whose relevant subject material has not been digitised.

These three posts have looked at one aspect of this – the catalogue. While there are always lessons to be learned from web search engines, user behaviour in archives is very different. Search is not just the means to an end, to be over and done with as quickly as possible because the goal has been achieved. Exploration of the archive is an intellectual activity in its own right; searching and browsing build understanding of what is there, how it is arranged and what it means. The challenge is to instil confidence in the user that they understand what they are doing, and make the journey rewarding.

There are no easy answers to these questions! We hope the journey will lead us to some innovative and powerful approaches to demystifying and making accessible The National Archives’ collections.

As we progress with Project Alpha, we’re looking to test some of the concepts with people new to The National Archives. If that sounds like you, we’d welcome your help! Register your interest here.